AMD rolls out new, more expensive mega-cache Epyc server processors • The Register

AMD has announced its latest, more expensive Epyc server processors, dubbed Milan-X, to extend the chip giant’s lead over Intel in technical computing applications. The key selling point is a massive amount of stacked cache, a major leap for HPC and other demanding areas.

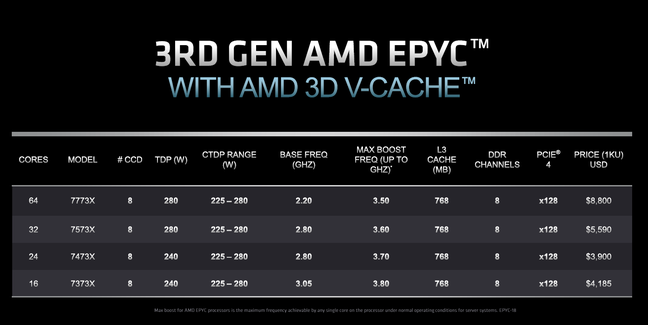

The four new microprocessors range from 16 to 64 CPU cores and represent a refresh of AMD’s third-generation Epyc chips that debuted last year. Above all, they bring cache: an unprecedented 768MB of L3, far more than the 256MB found in comparable 2021 chips.

The company claimed that this additional cache allows the new Epyc chips to run 23 to 88 percent faster than Intel’s latest Xeon processors for servers running technical computing applications from leading vendors Ansys and Altair. AMD said it could also significantly reduce the number of servers and the electricity needed to do the same amount of work.

But in exchange, the wholesale price of these chips carries a “modest premium” over Epyc processors with similar attributes, with the additional cost ranging from $664 to $1,000. We suspect these premiums may be passed on by server manufacturers such as Dell, Hewlett Packard Enterprise, Lenovo, Supermicro, and others that are offering Milan-X chips at launch. Microsoft Azure is also offering the new chips, which will replace last year’s Epyc processors in its Azure HBv3 virtual machines.

The transition should be fairly seamless, however, as the new chips are compatible with AMD’s existing platform for third-generation Epyc processors. This means there should be no issues running software on the new chips, and server manufacturers and cloud providers will only need to update the BIOS on existing server designs.

Ram Peddibhotla, vice president of Epyc product management at AMD, told The Register that the performance and cost savings of the Milan-X chips far outweigh their higher price.

“There is tremendous value that is driven by cache size which in turn determines the size of the performance gains. So I think a modest premium is more than rewarded by this performance boost,” he said.

The monster cache comes courtesy of AMD’s 3D V-Cache technology, which is one of the first uses of new 3D die-stacking technology. That’s why the new line goes by the somewhat awkward name “Third Generation AMD Epyc Processors with AMD 3D V-Cache Technology”.

The technology, which is also in AMD’s upcoming Ryzen 7 5800X3D processor for PC gaming, brings the L3 cache to 96MB for each group of cores on the processor, also known as a core complex die. That 96MB is triple the L3 cache in each core complex of the original third-generation Epyc processors released last year. And with the four new Milan-X processors each featuring eight core complexes, the total L3 cache reaches 768MB per processor.

What is particularly interesting, Peddibhotla said, is the flexibility in how the 96MB of L3 cache can be shared between the cores of each core complex. For the new 64-core Epyc 7773X, if an application uses all eight cores on each core complex, each core will have access to 12MB of cache. But if an application only needs one core in a complex, that core can access all 96MB on that core complex, which means models with lower core counts naturally have a higher cache-per-core ratio at all times.
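The cache arithmetic above can be checked with a short sketch. It uses only the figures quoted in the article (96MB per core complex, eight complexes per chip); the function names are illustrative, and the even split of cores across complexes is an assumption the article does not state outright.

```python
# Figures quoted in the article; helper names are illustrative only.
L3_PER_COMPLEX_MB = 96   # L3 cache per core complex
COMPLEXES_PER_CHIP = 8   # core complexes on each Milan-X processor


def total_l3_mb(sockets: int = 1) -> int:
    """Total L3 cache across all core complexes in a server."""
    return L3_PER_COMPLEX_MB * COMPLEXES_PER_CHIP * sockets


def l3_per_active_core_mb(active_cores_in_complex: int) -> float:
    """L3 cache available per core when N cores in one complex are busy."""
    if active_cores_in_complex < 1:
        raise ValueError("at least one core must be active")
    return L3_PER_COMPLEX_MB / active_cores_in_complex


def full_load_l3_per_core_mb(total_cores: int) -> float:
    """Cache per core with every core busy.

    Assumes cores are spread evenly across the eight complexes.
    """
    cores_per_complex = total_cores // COMPLEXES_PER_CHIP
    return l3_per_active_core_mb(cores_per_complex)


if __name__ == "__main__":
    print(total_l3_mb())          # one socket, in MB
    print(total_l3_mb(sockets=2)) # two sockets, in MB
    for cores in (16, 24, 32, 64):
        print(cores, full_load_l3_per_core_mb(cores))
```

Under that even-split assumption, the 16-core part would have 48MB of L3 per core at full load, against 12MB per core on the 64-core part.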

“It really goes a long way in lightly threaded applications,” he said.

L3 cache is the only big difference between the four new processors and last year’s comparable “F-series” models that have higher clocks. They come with the same Zen 3 architecture on the same 7nm manufacturing process, with support for PCIe Gen4 connectivity and up to eight channels of DDR4-3200 memory. They also have the same thermal envelopes and silicon-based security features like Secure Memory Encryption.

But Peddibhotla pointed out that the massive L3 cache has great benefits for technical computing, allowing two-socket servers to have over 1.5 GB of total L3 cache. Let’s be honest: that last fact actually made Peddibhotla feel a little giddy.

“Every time I say that I look back and say, holy cow, I don’t think I expected to say ‘gigabyte’ and ‘cache’ in the same sentence,” he said.

Peddibhotla wanted to clarify that the additional cache will make no difference to applications that are not cache-sensitive, which are plentiful in the data center market.

More importantly, he said, the technical computing applications that AMD is targeting with the new Milan-X chips are critical for many companies, from automakers to chipmakers like AMD. Other industries that use this type of software include chemical engineering, finance, energy, and life sciences.

“These are the most complex and demanding workloads in the data center. And it’s actually usually the main focus of the product design that’s at the core of the business. So technical computing workloads are really not supporting applications. Not incidental. They really go to the essence of what businesses actually do,” he said.

These applications include electronic design automation, which is used for chip design, and computational fluid dynamics, which is used to design things like planes, trains, and automobiles. The company is also targeting finite element analysis and structural analysis with the new Milan-X chips.

To give us an idea of how the extra cache can make a difference, AMD provided an example of a server running Synopsys’ VCS software, which is used for chip design. A server using last year’s 16-core Epyc 73F3 was able to complete 24.4 tasks per hour, while a server with the new 16-core Epyc 7373X could complete 40.6 tasks per hour, which translates to 66% faster performance.
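As a quick check on that figure, the percentage gain follows directly from the two throughput numbers AMD quoted. This is a minimal sketch; the function name is illustrative.

```python
def percent_faster(baseline_rate: float, new_rate: float) -> float:
    """Relative throughput gain of new_rate over baseline_rate, in percent."""
    if baseline_rate <= 0:
        raise ValueError("baseline_rate must be positive")
    return (new_rate / baseline_rate - 1.0) * 100.0


if __name__ == "__main__":
    # Tasks per hour quoted for the Epyc 73F3 and Epyc 7373X servers.
    gain = percent_faster(24.4, 40.6)
    print(f"{gain:.0f}% faster")  # prints "66% faster"
```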

AMD also provided competitive comparisons to Intel’s third-generation Xeon processors released last year. Compared to Intel’s top-of-the-line 40-core Xeon 8380, AMD’s new 64-core Epyc 7773X delivered up to 44% better performance using Altair Radioss for structural analysis, up to 47% better using Ansys Fluent for fluid dynamics, up to 69% better using Ansys LS-DYNA for finite element analysis, and up to 96% better using Ansys CFX for fluid dynamics.

According to Peddibhotla, structural analysis, fluid dynamics, and finite element analysis applications can perform better with more cores, which is one of the reasons, besides the larger cache, why AMD’s new 64-core chip delivers higher performance.

AMD’s rendering of its latest Epyc processor. The central silicon die in the package is the IO controller, and the dies around it are the core complexes.

The company also showed that its processors can compete with Intel core-for-core. Compared to Intel’s 32-core Xeon 8362, AMD’s new 32-core Epyc 7573X could perform up to 23% better with Ansys Fluent, up to 37% better with Altair Radioss, up to 47% better with Ansys LS-DYNA, and up to 88% better with Ansys CFX.

Some applications, like electronic design automation and some fluid dynamics software, rely more on raw clock speed, measured in gigahertz, than on core count, which is why the company is also launching the 16-core Epyc 7373X and the 24-core Epyc 7473X. However, the company did not provide competitive performance comparisons for these models.

Peddibhotla said the performance benefits of AMD’s new cache-rich chips can translate to “incredible savings and acquisition costs.”

In an example drawn up by AMD, the company said that 10 servers running AMD’s new 32-core Epyc 7573X could do the same amount of work as 20 servers running Intel’s 32-core Xeon 8362. This means AMD can essentially cut the number of servers and the electricity required by about half, according to the company, which can also reduce total upfront and ongoing costs by 51% over three years.
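The consolidation claim can be restated as simple arithmetic. The sketch below uses only the server counts from AMD’s example; the function names are illustrative, and the 51% cost figure is AMD’s own estimate, which this calculation does not attempt to reproduce.

```python
import math


def implied_per_server_speedup(baseline_servers: int, new_servers: int) -> float:
    """Per-server throughput ratio implied by doing equal work on fewer servers."""
    return baseline_servers / new_servers


def servers_needed(baseline_servers: int, per_server_speedup: float) -> int:
    """Servers required to match the baseline fleet, rounded up to whole machines."""
    return math.ceil(baseline_servers / per_server_speedup)


def reduction_percent(before: int, after: int) -> float:
    """Percentage drop in server count."""
    return (before - after) / before * 100.0


if __name__ == "__main__":
    # Server counts from AMD's example: 20 Xeon servers versus 10 Epyc 7573X servers.
    speedup = implied_per_server_speedup(20, 10)
    print(speedup)                      # 2.0
    print(servers_needed(20, speedup))  # 10
    print(reduction_percent(20, 10))    # 50.0
```

Rounding up matters for less tidy ratios: at a hypothetical 1.5x per-server speedup, matching 20 baseline servers would take 14 machines rather than 13.33.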

Peddibhotla touted this as a way for companies to improve their competitiveness, save money, and even meet trending sustainability goals.

“A company that adopts Milan-X can, of course, just reap those savings, but they can also reinvest them directly into their business to give their designers a lot more performance and the ability to run a lot more design cycles and this, in turn, will lead to higher quality products and faster time to market,” he said.

“That’s the key for us: when you provide that kind of cost savings and that kind of design acceleration, it directly translates into a competitive advantage for these companies.” ®

Rent now, maybe buy later?

Microsoft Azure is the first among major clouds to announce that it offers AMD’s Milan-X processors as a service, Brandon Vigliarolo writes.

The Windows giant said on Monday that the silicon is generally available in its HBv3 virtual machines.

“Microsoft and AMD share a vision for a new era of high-performance computing,” said Mark Russinovich, CTO of Microsoft Azure. “One defined by the rapid improvement of Azure’s HPC and AI platforms.”

He also mentioned a number of use cases for 3D V-Cache-enabled HBv3 machines, from fluid dynamics and weather simulation to AI inference and energy research.

Azure subscribers can launch Milan-X chips in the cloud today, provided they are in the East US, South Central US, or West Europe regions. Those in UK South, Southeast Asia, China North 3, and Central India will have to wait until Q2 2022. If you are not in one of these regions, sorry: you probably won’t get this unless Microsoft expands availability further.
